Training GANs on Smaller Data Sets

Training GANs on small datasets often leads to the discriminator overfitting the training data, which in turn causes training to diverge. A new paper from Tero Karras and colleagues at Nvidia proposes an approach to solving this problem.

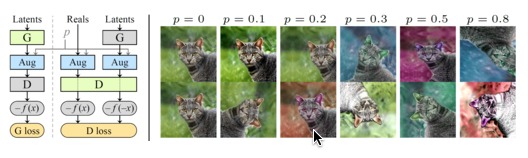

The new approach, called Adaptive Discriminator Augmentation (ADA), applies a carefully designed data augmentation regime to the images the discriminator sees and adapts the strength of that augmentation as training progresses, so that a GAN can be trained on a much smaller dataset.
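To make the "adaptive" part concrete, here is a minimal pure-Python sketch of the idea, not the authors' implementation. It assumes an overfitting heuristic based on the sign of the discriminator's outputs on real images, and it uses a single horizontal flip as a stand-in for the full augmentation pipeline. The function names (`update_p`, `maybe_augment`) and the `target` and `step` values are illustrative assumptions.

```python
import random

def update_p(p, real_logits, target=0.6, step=0.01):
    """Nudge the augmentation probability p up or down.

    real_logits: discriminator outputs on a batch of real images.
    target, step: hypothetical tuning values chosen for illustration.
    """
    # Heuristic: average sign of D's outputs on real images.
    # Values near 1 suggest D is very confident on reals (overfitting).
    signs = [1.0 if x > 0 else (-1.0 if x < 0 else 0.0) for x in real_logits]
    r_t = sum(signs) / len(signs)
    # Augment more when D looks overfit, less otherwise.
    direction = 1.0 if r_t > target else -1.0
    # Keep p a valid probability.
    return min(1.0, max(0.0, p + direction * step))

def maybe_augment(image, p, rng=random):
    """Apply a horizontal flip with probability p.

    image: list of rows (each a list of pixel values).
    A single flip stands in for a richer set of augmentations.
    """
    if rng.random() < p:
        return [row[::-1] for row in image]
    return image
```

In a real training loop, the same `maybe_augment` call would be applied to both real and generated images before they reach the discriminator, and `update_p` would be called every few minibatches.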

Now if you are a fastai person, this sounds a lot like what Jeremy has been teaching in the fastai course: how to be clever about generating randomized augmented variations of your input data on the fly during training. And indeed the fastai API is set up not only to make that easy to do, but also to let you run that randomized data augmentation on the GPU.

There is a GitHub repository devoted to this work, called StyleGAN2 with Adaptive Discriminator Augmentation (ADA). It contains the official TensorFlow implementation, which supersedes the previously published StyleGAN2 work.

Here's a link to their paper, "Training Generative Adversarial Networks with Limited Data".
