GhostNet - Speeding up Computation by Reducing Redundancy

Imagine that you could restructure your neural net architecture to achieve a 40% speedup. Sounds interesting, right?

GhostNets are deep learning architectures that add computationally cheap operations (think linear layers) alongside the usual convolutions. The idea is to generate extra feature maps from those cheap operations instead of from full convolutions.

The GhostNet team noticed that there seemed to be a lot of redundancy in the feature maps generated by a neural net architecture. Some feature maps just seem to be clones of other ones (ghosts). They looked at the feature maps from the first block of the ResNet-50 architecture, and trained a linear net to learn the mapping between pairs of similar maps.

They then hypothesized that you don't need to spend computation generating so many unique feature maps in each block in the neural net. Instead, you can calculate a few 'intrinsic' maps and then use cheap and fast linear operations to approximate the rest of the 'ghost' maps.
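To get a feel for why this saves computation, here is a rough multiply-add count for a standard convolution versus a ghost-style replacement. It is a back-of-the-envelope sketch, not the paper's benchmark: the function names, the layer sizes, and the choice of `s=2` (half the maps are intrinsic) and a 3x3 cheap kernel `d` are all assumptions made for illustration, and bias, batch norm, and activations are ignored.

```python
def conv_mult_adds(c_in, c_out, k, h_out, w_out):
    """Multiply-adds for a standard k x k convolution (bias ignored)."""
    return c_out * h_out * w_out * c_in * k * k


def ghost_mult_adds(c_in, c_out, k, h_out, w_out, s=2, d=3):
    """Multiply-adds when only c_out / s maps come from a full convolution.

    The remaining maps are produced by cheap d x d per-channel (depthwise)
    operations applied to the intrinsic maps. Assumes c_out is divisible by s.
    """
    intrinsic = c_out // s
    primary = conv_mult_adds(c_in, intrinsic, k, h_out, w_out)
    cheap = (s - 1) * intrinsic * h_out * w_out * d * d
    return primary + cheap


# Hypothetical layer: 64 -> 128 channels, 3x3 kernel, 56x56 output.
standard = conv_mult_adds(64, 128, 3, 56, 56)
ghost = ghost_mult_adds(64, 128, 3, 56, 56, s=2, d=3)
ratio = standard / ghost
print(f"standard: {standard:,}  ghost: {ghost:,}  ratio: {ratio:.2f}x")
```

For this made-up layer the ratio comes out just under 2x, which matches the intuition that with `s=2` you pay for about half of the expensive convolution plus a small overhead for the cheap operations. Real end-to-end speedups depend on the whole network and the hardware.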

In their paper they show examples of feature maps from the 2nd layer of the VGG-16 architecture, and then show how ghost maps can approximate them. They use a convolution layer to produce half of the layer 2 feature maps, and then use a cheap linear layer to generate the other half as ghost maps.

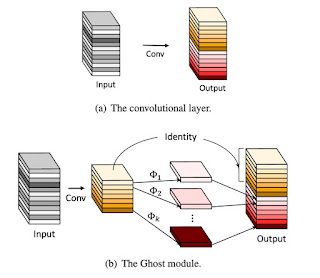

They then go on to propose a ghost module as an alternative to a normal convolution layer. A diagram in their paper shows how it works: they do half of the normal convolutions, then use linear 'ghost' layers to generate the remaining maps, so the output is the same size as that of the original convolutional layer.
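A ghost module along these lines can be sketched in PyTorch as follows. This is a minimal illustration written for this post, not the authors' reference code: the class name, argument names, and defaults are assumptions, and a depthwise convolution is used here as the cheap linear operation. It assumes `ratio` is at least 2.

```python
import math

import torch
import torch.nn as nn


class GhostModule(nn.Module):
    """Sketch of a ghost module: a few real conv maps plus cheap 'ghost' maps."""

    def __init__(self, in_channels, out_channels, kernel_size=1, ratio=2, cheap_kernel=3):
        super().__init__()
        self.out_channels = out_channels
        # Number of 'intrinsic' maps produced by the ordinary convolution.
        intrinsic = math.ceil(out_channels / ratio)
        # Number of 'ghost' maps derived from the intrinsic ones.
        ghost = intrinsic * (ratio - 1)

        # Ordinary (expensive) convolution, but with fewer output channels.
        self.primary = nn.Sequential(
            nn.Conv2d(in_channels, intrinsic, kernel_size,
                      padding=kernel_size // 2, bias=False),
            nn.BatchNorm2d(intrinsic),
            nn.ReLU(inplace=True),
        )
        # Cheap operation: depthwise conv, each intrinsic map feeds its own ghosts.
        self.cheap = nn.Sequential(
            nn.Conv2d(intrinsic, ghost, cheap_kernel,
                      padding=cheap_kernel // 2, groups=intrinsic, bias=False),
            nn.BatchNorm2d(ghost),
            nn.ReLU(inplace=True),
        )

    def forward(self, x):
        intrinsic = self.primary(x)
        ghosts = self.cheap(intrinsic)
        out = torch.cat([intrinsic, ghosts], dim=1)
        # Trim in case ceil() produced more channels than requested.
        return out[:, :self.out_channels]


# Usage: same output shape as a 16 -> 32 channel convolution would give.
x = torch.randn(2, 16, 8, 8)
module = GhostModule(16, 32)
y = module(x)
print(y.shape)
```

With `ratio=2`, half of the output channels come from the primary convolution and the other half from the cheap branch, and the two are concatenated so the result has the same shape as a regular convolution's output.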

Here's the paper, 'GhostNet: More Features from Cheap Operations'.

Here's an overview blog post, 'How to Ghost your Neural Net'.

It mentions at the end that ghosting is similar to data augmentation. Instead of creating good-enough training images to expand your training set, you generate good-enough feature maps for each layer.

There is another good overview article on GhostNet on the Paperspace blog, available here.

There is a GitHub repository for GhostNet available here that is based on TensorFlow.

HTC of course prefers PyTorch, and GhostNet is available for PyTorch as well here.
