Swapping Autoencoder for Deep Image Manipulation

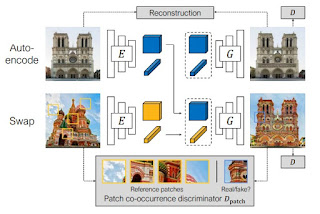

There's a new paper out of Berkeley that proposes something called a 'Swapping Autoencoder' for neural-net image manipulation. The research is funded by Adobe, and the authors are specifically looking at alternatives to GANs for image manipulation.

Note the global vs. local split in the algorithm and in the discussion of it, which can also be thought of as 'style' vs. 'content': a global code captures texture, while a local (spatial) code captures structure and layout. This is a paradigm we have seen over and over again in things like 'style transfer'.
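To make the global/local split concrete, here is a toy NumPy sketch of the swapping idea. It is not the paper's architecture: the real model uses deep convolutional networks trained with reconstruction and adversarial losses. The linear "encoder" and "decoder", the weight names (`W_struct`, `W_tex`, `W_dec`) and all sizes here are hypothetical. The point is only that the structure code keeps spatial positions while the texture code is pooled into a single vector, so decoding image A's structure with image B's texture yields a hybrid.

```python
import numpy as np

rng = np.random.default_rng(0)

H, W, C = 8, 8, 3          # toy image size (assumption)
S_CH, T_DIM = 4, 6         # hypothetical structure channels / texture-vector length

# Hypothetical "encoder" weights: a per-pixel map for structure, another for texture.
W_struct = rng.normal(size=(C, S_CH))
W_tex = rng.normal(size=(C, T_DIM))
# Hypothetical "decoder" weights: maps [structure, tiled texture] back to RGB per pixel.
W_dec = rng.normal(size=(S_CH + T_DIM, C))

def encode(img):
    """Split an (H, W, C) image into a local structure code and a global texture code."""
    structure = img @ W_struct                   # (H, W, S_CH): keeps the spatial layout
    texture = (img @ W_tex).mean(axis=(0, 1))    # (T_DIM,): pooled over all positions
    return structure, texture

def decode(structure, texture):
    """Rebuild an image by broadcasting the global texture code to every location."""
    h, w, _ = structure.shape
    tiled = np.broadcast_to(texture, (h, w, texture.shape[0]))
    return np.concatenate([structure, tiled], axis=-1) @ W_dec   # (H, W, C)

img_a = rng.random((H, W, C))
img_b = rng.random((H, W, C))

s_a, t_a = encode(img_a)
s_b, t_b = encode(img_b)

recon_a = decode(s_a, t_a)   # reconstruction of A (what a reconstruction loss would check)
hybrid = decode(s_a, t_b)    # the "swap": layout of A with the texture code of B
print(hybrid.shape)          # (8, 8, 3)
```

Because the texture code is a single vector, swapping it changes every location in the same way, while the structure code decides where things are.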

The technique is fully unsupervised, so no class labeling is required.

They use a patch-based method (a co-occurrence patch discriminator), which is something we have seen in a lot of the recent Berkeley work.
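As a rough illustration of the patch-based part, the sketch below only shows how random patches might be sampled: patches from the swapped output are the ones the generator has to make look real, while patches from the texture-reference image serve as real examples and as context. A learned discriminator would then judge whether an output patch shares the texture of the reference patches; that network is not shown. The function name `random_patches`, the patch size and the patch count are hypothetical choices, and random arrays stand in for real images.

```python
import numpy as np

def random_patches(img, n, patch, rng):
    """Crop n random patch x patch squares from an (H, W, C) image."""
    h, w, _ = img.shape
    ys = rng.integers(0, h - patch + 1, size=n)   # top-left rows (high is exclusive)
    xs = rng.integers(0, w - patch + 1, size=n)   # top-left columns
    return np.stack([img[y:y + patch, x:x + patch] for y, x in zip(ys, xs)])

rng = np.random.default_rng(0)
texture_ref = rng.random((64, 64, 3))   # stand-in for the image supplying the texture
hybrid = rng.random((64, 64, 3))        # stand-in for the swapped output

PATCH = 16   # hypothetical patch size
N = 8        # hypothetical number of patches per image

real = random_patches(texture_ref, N, PATCH, rng)       # "real" texture patches
fake = random_patches(hybrid, N, PATCH, rng)            # patches the generator must make convincing
reference = random_patches(texture_ref, N, PATCH, rng)  # extra reference patches used as context

print(real.shape, fake.shape, reference.shape)  # (8, 16, 16, 3) (8, 16, 16, 3) (8, 16, 16, 3)
```

Working on patches rather than whole images is what lets the discriminator focus on texture statistics independently of where things sit in the frame.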

Here's an overview video someone made that helps explain what is going on.

Here's a link to the paper.

Here's another short video from the project page showing how they are approaching adding this to a 'Photoshop-style' interface that artists can use in their work.

Here's a link to some PyTorch code to implement this model.
