RepVGG Convolutional Neural Net Architecture

There's an interesting new paper out called 'RepVGG: Making VGG-style ConvNets Great Again'. Were they ever not great? I guess in today's architecture-of-the-month mad dash of deep learning research, they are old news.

But oftentimes the mad dash is all about using larger and larger models, or inventing a new architecture just to get a paper published. There is value in rethinking old architectures, especially if restructuring them lets them train and run better, because then you might actually understand something about how they work internally, which is the ultimate goal.

The abstract lays out very clearly why it's worth understanding what is going on in RepVGG.

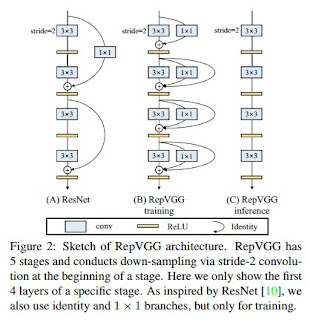

> We present a simple but powerful architecture of convolutional neural network, which has a VGG-like inference-time body composed of nothing but a stack of 3x3 convolution and ReLU, while the training-time model has a multi-branch topology. Such decoupling of the training-time and inference-time architecture is realized by a structural re-parameterization technique so that the model is named RepVGG. On ImageNet, RepVGG reaches over 80% top-1 accuracy, which is the first time for a plain model, to the best of our knowledge. On NVIDIA 1080Ti GPU, RepVGG models run 83% faster than ResNet-50 or 101% faster than ResNet-101 with higher accuracy and show favorable accuracy-speed trade-off compared to the state-of-the-art models like EfficientNet and RegNet.
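The trick behind "structural re-parameterization" is that every training-time branch is linear (a convolution followed by inference-mode batch norm), so the branches can be folded into a single 3x3 convolution once training is done. Here is a minimal NumPy sketch of that idea, assuming the block layout described in the RepVGG paper (a 3x3 conv + BN branch, a 1x1 conv + BN branch, and a BN-only identity branch, summed before the ReLU), stride 1, and equal input/output channels so the identity branch exists. The helper names (`conv2d`, `fuse_conv_bn`, `reparameterize`, and so on) are hypothetical and not taken from the authors' PyTorch code, and the weights and BN statistics are random placeholders.

```python
import numpy as np

def conv2d(x, w, b=None, pad=0):
    """Naive stride-1 2-D convolution (cross-correlation).
    x: (C_in, H, W), w: (C_out, C_in, k, k) -> (C_out, H', W')."""
    x = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    c_out, _, k, _ = w.shape
    h, wd = x.shape[1] - k + 1, x.shape[2] - k + 1
    out = np.zeros((c_out, h, wd))
    for i in range(h):
        for j in range(wd):
            patch = x[:, i:i + k, j:j + k]
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2]))
    if b is not None:
        out += b[:, None, None]
    return out

def batchnorm(x, gamma, beta, mean, var, eps=1e-5):
    """Inference-mode batch norm applied per channel (axis 0)."""
    scale = gamma / np.sqrt(var + eps)
    return x * scale[:, None, None] + (beta - mean * scale)[:, None, None]

def fuse_conv_bn(w, gamma, beta, mean, var, eps=1e-5):
    """Fold a BN layer into the preceding bias-free conv, returning (w', b')."""
    scale = gamma / np.sqrt(var + eps)
    return w * scale[:, None, None, None], beta - mean * scale

def random_bn(c, rng):
    """Hypothetical BN parameters (gamma, beta, mean, var) for the demo."""
    return (rng.uniform(0.5, 1.5, c), rng.normal(size=c),
            rng.normal(size=c), rng.uniform(0.5, 1.5, c))

def train_block(x, w3, bn3, w1, bn1, bn_id):
    """Training-time block: 3x3+BN, 1x1+BN and identity BN, summed, then ReLU."""
    y = (batchnorm(conv2d(x, w3, pad=1), *bn3)
         + batchnorm(conv2d(x, w1, pad=0), *bn1)
         + batchnorm(x, *bn_id))
    return np.maximum(y, 0)

def reparameterize(w3, bn3, w1, bn1, bn_id):
    """Collapse the three branches into one 3x3 kernel and one bias vector."""
    c = w3.shape[0]
    k3, b3 = fuse_conv_bn(w3, *bn3)
    # Zero-pad the 1x1 kernel to 3x3 so its weight sits at the centre tap.
    k1, b1 = fuse_conv_bn(np.pad(w1, ((0, 0), (0, 0), (1, 1), (1, 1))), *bn1)
    # The identity branch is a 3x3 kernel with a 1 at the centre of channel c -> c.
    w_id = np.zeros((c, c, 3, 3))
    w_id[np.arange(c), np.arange(c), 1, 1] = 1.0
    kid, bid = fuse_conv_bn(w_id, *bn_id)
    return k3 + k1 + kid, b3 + b1 + bid

def infer_block(x, w, b):
    """Inference-time block: a single 3x3 conv plus ReLU (VGG-style)."""
    return np.maximum(conv2d(x, w, b, pad=1), 0)

rng = np.random.default_rng(0)
C, H, W = 4, 8, 8
x = rng.normal(size=(C, H, W))
w3 = rng.normal(size=(C, C, 3, 3))
w1 = rng.normal(size=(C, C, 1, 1))
bn3, bn1, bn_id = random_bn(C, rng), random_bn(C, rng), random_bn(C, rng)

y_train = train_block(x, w3, bn3, w1, bn1, bn_id)
w_fused, b_fused = reparameterize(w3, bn3, w1, bn1, bn_id)
y_infer = infer_block(x, w_fused, b_fused)
print(np.allclose(y_train, y_infer))  # True
```

The two outputs match because convolution is linear in its kernel: scaling by the BN factor, zero-padding the 1x1 kernel, and writing the identity as a centre-tap kernel all produce 3x3 kernels that can simply be summed. After this fold the network is just a stack of 3x3 convs and ReLUs, which is the plain VGG-like inference body the abstract describes.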

Here's a link to the paper.

Here's a link to their GitHub repository with a PyTorch (yeah!) implementation.
