Diving into Generative Adversarial Networks

We've been talking a lot about GANs here at HTC. Let's take a deeper dive into understanding how they work, and what you can do with them. I'm going to be presenting some material here from a number of different sources. I'll include links back to those sources so you can check them out in more detail at your leisure.

The block diagram below shows off the architecture of the Generator in a DCGAN. DCGAN stands for Deep Convolutional Generative Adversarial Networks. It's from a 2016 paper on the DCGAN architecture.

You may recall from previous HTC posts on GANs that a GAN architecture consists of 2 different parts, the Generator and the Discriminator. The Generator takes a random noise input and generates an image as its output. The Discriminator looks at an image (either a real one or one produced by the Generator) and determines whether it is real or fake. So it's a binary classifier net that takes an image as its input, and says 'yes' or 'no' as its output (yes, the input image is real; no, the input image is fake).

Research on GANs has been exploding over the past few years. Ian Goodfellow has a good slide showing this progress, which he presented in the GANs for Good panel discussion we posted here.

So right away you can see the progress made from 2014 to 2018 for the specific example of generating facial images of people, which is pretty dramatic. And it continues to improve. Here's a more recent example below, which is output from the StyleGAN2 architecture.

The quality of the artificial or fake images generated by the StyleGAN2 architecture is pretty amazing. In some sense it enters the realm of being magical. What is going on in systems like this one is really fascinating.

Jonathan Hui has another great Medium overview article that gets into much more of the specifics of how the StyleGAN2 architecture works.

Earlier we said that the Generator in a GAN takes a random noise input and generates a complete image as its output. But there is another way to conceptually think about the input vector to the Generator. We can think of it as a latent variable z.

So what does that mean, and how can we take advantage of this expanded conceptual viewpoint of how a GAN works?

In our recent post of the Deep Generative Modeling lecture from the MIT Intro to Deep Learning bootcamp, Ava showed the following slide about Latent Variable Models. It contrasts 2 different generative neural net models, the Variational AutoEncoder (VAE) and the Generative Adversarial Network (GAN).

The orange block in both systems is the latent variable part of each system. You may recall from the discussion of how a VAE works that you can think of it as a 'compression - decompression' system. The left half of the net (the encoder) takes an image and compresses it. The right half (the decoder) decompresses it. So the orange part, the latent variables, can be thought of as the compression coefficients in some sense.

If you have this world view in your head, then you can see that the GAN Generator is acting like the right side of the VAE net. It starts from a latent variable representation, and then generates an image off of that. So in a vanilla GAN architecture, the random noise input is actually the latent variable. Fascinating.
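To make the 'GAN Generator as the right half of a VAE' idea concrete, here is a minimal Python sketch. It is a toy under stated assumptions: the tiny sizes and the random weights inside the hypothetical `decode` function stand in for a trained network. The only difference between the two paths is where z comes from. A VAE samples it from the mean and variance its encoder predicted (the 'reparameterization trick'), while a vanilla GAN draws it straight from a standard normal distribution. The decoding step is identical.

```python
import math
import random

LATENT_DIM = 4   # toy size; real models use e.g. 100 or 512 dimensions
IMAGE_SIZE = 6   # toy "image" of 6 pixels

rng = random.Random(0)
# Hypothetical fixed weights standing in for a trained VAE decoder / GAN Generator.
W = [[rng.gauss(0, 1) for _ in range(LATENT_DIM)] for _ in range(IMAGE_SIZE)]

def decode(z):
    """Map a latent vector to pixel values in [-1, 1], like a tanh output layer."""
    return [math.tanh(sum(w * zi for w, zi in zip(row, z))) for row in W]

def vae_latent(mu, log_var, rng):
    """VAE: the encoder predicts mu and log-variance; sample z via the reparameterization trick."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0, 1) for m, lv in zip(mu, log_var)]

def gan_latent(rng):
    """Vanilla GAN: z is simply drawn from a standard normal prior."""
    return [rng.gauss(0, 1) for _ in range(LATENT_DIM)]

# Pretend an encoder produced these statistics for some input image (hypothetical values).
mu, log_var = [0.5, -1.0, 0.0, 2.0], [-2.0, -2.0, -2.0, -2.0]

vae_image = decode(vae_latent(mu, log_var, rng))  # decompress an encoded image
gan_image = decode(gan_latent(rng))               # generate from pure noise
```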

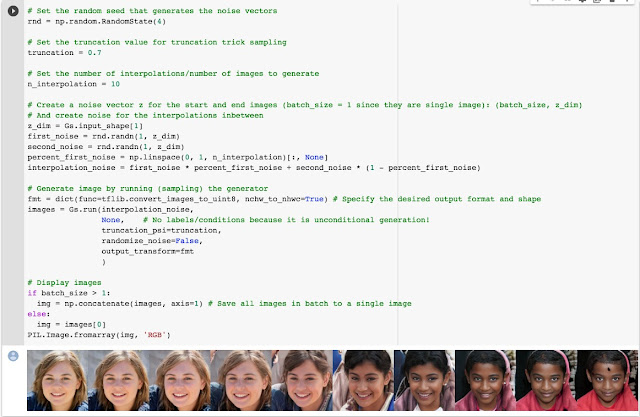

To get a feel for what I mean by this, we're going to linearly interpolate between 2 different random noise inputs, and then use each interpolated vector as the input to a trained GAN Generator (one that was trained to generate facial images). The screenshot below is from a Jupyter notebook I was working with to try this out. The result at the bottom is what I got when I ran the cell.

So the far left image is what the Generator output for a specific random noise vector. The far right image is what the Generator output for a second random noise vector. The images in between were generated by linearly interpolating between those 2 random noise vectors and feeding each intermediate vector to the Generator. Note that we're interpolating inside the latent space of the GAN Generator, not blending the pixels of the two end images.
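The interpolation itself is just arithmetic on latent vectors. The sketch below assumes a 512-dimensional standard normal latent (StyleGAN's size; DCGAN-style models commonly use 100 dimensions) and leaves out the trained Generator `G`, which the final comment only gestures at. Some practitioners prefer spherical interpolation (slerp) for Gaussian latents, but plain linear interpolation is what is described here.

```python
import random

def lerp(z0, z1, t):
    """Linear interpolation: t=0 returns z0, t=1 returns z1."""
    return [(1 - t) * a + t * b for a, b in zip(z0, z1)]

def interpolate(z0, z1, steps):
    """Return `steps` evenly spaced latent vectors from z0 to z1, endpoints included."""
    return [lerp(z0, z1, i / (steps - 1)) for i in range(steps)]

rng = random.Random(42)
latent_dim = 512  # assumption: StyleGAN-sized latent
z_start = [rng.gauss(0, 1) for _ in range(latent_dim)]
z_end = [rng.gauss(0, 1) for _ in range(latent_dim)]

path = interpolate(z_start, z_end, steps=8)
# Each vector in `path` would then go through the trained Generator,
# e.g. images = [G(z) for z in path], to produce one row of faces.
```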

In practice there are probably going to be a lot of different perceptual factors associated with the output images that you might want to control. The issue is that they are all jumbled together inside the learned latent space. So you want to try to un-jumble (disentangle) them, so that they are more controllable. A lot of the StyleGAN paper has to do with how you do that de-jumbling. Hint: you can use another neural net to do it.

Here's an example of what we mean by using a neural net to disentangle the latent space. The left side shows the conventional input to a GAN Generator: the latent vector z is fed directly into the GAN architecture. Remember, the latent vector z is just the noise vector used as the input to the GAN Generator.

The right side shows how you can use another neural net, called a mapping network, to transform the latent vector z into what we will call the intermediate latent vector w(z). So w is another latent vector, derived from the noise vector z, and it is what drives the GAN Generator instead of the original z. In StyleGAN, w is fed into every layer of the Generator as a set of 'styles' rather than only into its first layer.

You can think of what we just discussed as an introduction to a really fascinating topic. How do we add user-adjustable parameter knobs to a deep learning system?
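Here is a toy sketch of the z to w mapping in plain Python. It follows the broad shape described in the StyleGAN paper (normalize z, then a stack of fully connected layers with leaky ReLU activations; the paper uses 8 layers of width 512). The width here is shrunk to 16 and the weights are random placeholders rather than trained values, so the output is only structurally meaningful.

```python
import math
import random

def pixel_norm(z, eps=1e-8):
    """Rescale z so its mean squared value is ~1 (StyleGAN normalizes z before mapping)."""
    scale = 1.0 / math.sqrt(sum(v * v for v in z) / len(z) + eps)
    return [v * scale for v in z]

def leaky_relu(x, slope=0.2):
    return x if x >= 0 else slope * x

def make_layer(dim, rng):
    """Random weights standing in for one trained fully connected layer (hypothetical)."""
    weights = [[rng.gauss(0, 1 / math.sqrt(dim)) for _ in range(dim)] for _ in range(dim)]
    biases = [0.0] * dim
    return weights, biases

def mapping_network(z, layers):
    """Map latent z to intermediate latent w with dense + leaky ReLU layers."""
    h = pixel_norm(z)
    for weights, biases in layers:
        h = [leaky_relu(sum(w * x for w, x in zip(row, h)) + b)
             for row, b in zip(weights, biases)]
    return h

rng = random.Random(0)
dim = 16                                           # StyleGAN uses 512
layers = [make_layer(dim, rng) for _ in range(8)]  # StyleGAN uses 8 layers
z = [rng.gauss(0, 1) for _ in range(dim)]
w = mapping_network(z, layers)                     # w would drive the Generator instead of z
```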

Think about the different kinds of deep learning systems you have been exposed to. A deep learning system that can tell whether an input image is a dog or a cat. A deep learning system that can tell you what objects are inside an image (contains person, cat, car). A deep learning system that looks at a radiograph and says whether cancer is there or not. All of these examples are systems that have an input and an output, but there are no knobs a user of the system could adjust to change its behavior.

The trick to adding knobs is to find ways to modify or adjust the latent variables of the system in a controlled way. We're going to be diving more deeply into this topic in subsequent posts.
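A simple version of such a knob, sketched under assumptions: suppose we already have a direction in latent space that corresponds to some attribute. The `smile_direction` below is a hypothetical random placeholder; finding a real one requires analyzing a trained model, which this sketch does not do. Turning the knob then just means adding a scaled copy of that direction to the latent vector before it goes into the Generator.

```python
import math
import random

def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def turn_knob(w, direction, strength):
    """Shift latent w along a unit-length direction by `strength` (the knob setting)."""
    d = normalize(direction)
    return [wi + strength * di for wi, di in zip(w, d)]

rng = random.Random(1)
dim = 16
w = [rng.gauss(0, 1) for _ in range(dim)]
# Hypothetical attribute direction (e.g. "more smiling"); a placeholder, not a learned one.
smile_direction = [rng.gauss(0, 1) for _ in range(dim)]

knob_settings = [-2.0, -1.0, 0.0, 1.0, 2.0]
variants = [turn_knob(w, smile_direction, s) for s in knob_settings]
# Each variant would be passed to the Generator to render the adjusted image.
```

Setting the knob to 0 leaves the latent vector, and therefore the image, unchanged; positive and negative settings push the attribute in opposite directions.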

Let's take a look at a short video that shows off some of what we've just been talking about.

The video starts off by showing video examples of the latent space interpolation I showed off above. Seeing it as a moving video is pretty cool. It looks like an image morph, but remember they are really just interpolating between different random number inputs to the GAN Generator to create what you are seeing in the first part of the video.

Another feature of the StyleGAN architecture is that it injects additional random noise into different layers of the Generator. Noise added at the coarse (low-resolution) layers produces larger-scale stochastic variation in the generated images, while noise added at the fine (high-resolution) layers only changes fine details. The video shows this off as well.
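The StyleGAN paper describes each noise input as a single-channel image of uncorrelated Gaussian noise that is broadcast to all feature maps of a layer, with a learned scaling factor per feature map. Here is a minimal plain-Python sketch of that one step, assuming fixed (not learned) scales and a flattened 4x4 feature map with 3 channels.

```python
import random

def inject_noise(features, scales, rng):
    """
    features: list of channels, each a flattened list of per-pixel activations.
    scales:   one scaling factor per channel (learned in StyleGAN; fixed here).
    One noise image is drawn and added to every channel, weighted by that channel's scale.
    """
    num_pixels = len(features[0])
    noise = [rng.gauss(0, 1) for _ in range(num_pixels)]
    return [[x + s * n for x, n in zip(channel, noise)]
            for channel, s in zip(features, scales)]

rng = random.Random(0)
features = [[0.0] * 16 for _ in range(3)]  # 3 channels of a 4x4 feature map, all zeros
scales = [0.0, 0.1, 1.0]                   # channel 0 ignores noise, channel 2 gets it at full strength
noisy = inject_noise(features, scales, rng)
```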

Here's an interesting question to think about. We showed that a GAN Generator is taking a latent representation and converting it into an image. But suppose we have a specific image, and we want to understand where it lies inside of that latent space. How could we figure that out?

In the analogy we were discussing above, we want to find the random input vector that, when used as input to the GAN Generator, will cause the Generator to output our specific image (or the closest image it is able to produce).
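One common way to attack this is to treat it as an optimization problem (often called GAN inversion or projection): start from a random z and use gradient descent to shrink the difference between G(z) and the target image. The sketch below assumes a tiny, hypothetical linear 'generator' so the gradient can be written by hand. A real Generator is a deep nonlinear net whose gradient you would get from a framework's autograd, and the search is then no longer guaranteed to find an exact match.

```python
import random

# A tiny, hypothetical linear "generator": image = A @ z (3-D latent -> 6-pixel image).
A = [
    [1.0, 0.2, 0.0],
    [0.0, 1.0, 0.3],
    [0.5, 0.0, 1.0],
    [0.2, 0.4, 0.1],
    [0.0, 0.3, 0.6],
    [0.7, 0.1, 0.0],
]

def generate(z):
    return [sum(a * zi for a, zi in zip(row, z)) for row in A]

def loss_and_grad(z, target):
    """Squared-error loss between G(z) and the target image, and its gradient w.r.t. z."""
    residual = [g - t for g, t in zip(generate(z), target)]
    loss = sum(r * r for r in residual)
    grad = [2 * sum(A[i][j] * residual[i] for i in range(len(A))) for j in range(len(z))]
    return loss, grad

def invert(target, latent_dim, steps=500, lr=0.1, seed=0):
    """Search the latent space by gradient descent, starting from a random z."""
    rng = random.Random(seed)
    z = [rng.gauss(0, 1) for _ in range(latent_dim)]
    for _ in range(steps):
        _, grad = loss_and_grad(z, target)
        z = [zi - lr * g for zi, g in zip(z, grad)]
    return z

z_true = [0.8, -1.2, 0.5]
target_image = generate(z_true)   # an image we know this generator can produce
z_found = invert(target_image, latent_dim=3)
```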

Ok, I think we've gotten to the point where we can let Xander from Arxiv Insights get us super pumped about how GANs in general, and StyleGAN specifically, work.

So after watching that, I hope you are very excited. I know I am. Again, we're specifically talking about working with images here, but the underlying principles can be applied to many different problem domains. You should also have a much better idea of how to answer the question I asked before we got to this video: how can we take a specific image and map it to a specific location in the latent space of a GAN?

Here's a link to the StyleGAN paper Xander is referring to.

Here's a link to the excellent blog post on GAN objective functions that Xander mentioned.

Now, this objective function blog post is from 2018, and it is missing some of the more recent developments, like using attention. And as you think back to how bad the original GAN output images looked, and how they got better over time, keep in mind that the objective function(s) used in training had a big impact.

Always keep in mind that your deep learning system is only going to learn what you are telling it to learn, and that is based on the objective function.
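For reference, the original GAN objective from Goodfellow et al. (2014) can be written as a discriminator loss and a generator loss computed from the Discriminator's output probabilities. The plain-Python sketch below takes those probabilities as given (no actual networks are involved) and also shows the 'non-saturating' generator loss that the original paper suggests using in practice.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: push D(x) toward 1 for real images and D(G(z)) toward 0 for fakes."""
    return -(sum(math.log(p) for p in d_real) / len(d_real)
             + sum(math.log(1 - p) for p in d_fake) / len(d_fake))

def generator_loss_minimax(d_fake):
    """Original minimax form: G minimizes log(1 - D(G(z)))."""
    return sum(math.log(1 - p) for p in d_fake) / len(d_fake)

def generator_loss_nonsaturating(d_fake):
    """Non-saturating form: G minimizes -log D(G(z)) instead."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical Discriminator outputs for a batch of real and generated images.
d_real = [0.9, 0.8]
d_fake = [0.1, 0.2]
print(discriminator_loss(d_real, d_fake), generator_loss_nonsaturating(d_fake))
```

The design choice matters early in training: when the Discriminator confidently rejects fakes (D(G(z)) near 0), log(1 - D(G(z))) is nearly flat and gives the Generator weak gradients, while -log D(G(z)) is still steep there.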

Jeremy brings this up as well in some of the fastai lectures we'll be listening to later in the HTC 'Getting Started with Deep Learning' course. Some of the original work on using deep learning for image interpolation used mean squared error as the optimization objective, and ended up with blurry output because of that.
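A small worked example of why mean squared error produces blur: if a pixel on an edge could plausibly be either black (0) or white (255), the single prediction that minimizes the average squared error is the midpoint, a gray value that matches neither plausible answer. The pixel values and the candidate grid below are arbitrary illustrative assumptions.

```python
def mse(prediction, targets):
    """Mean squared error of one prediction against several equally likely targets."""
    return sum((prediction - t) ** 2 for t in targets) / len(targets)

# Hypothetical pixel on a sharp edge: equally likely to be black or white in the training data.
plausible_values = [0.0, 255.0]

candidates = [0.0, 63.75, 127.5, 191.25, 255.0]
best = min(candidates, key=lambda p: mse(p, plausible_values))
print(best)  # 127.5: a gray value that matches neither plausible image
```

Repeat that averaging over every uncertain pixel and the result is a smeared, blurry image.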

Something to think about

Because I have a background in Human Visual Modeling, I keep wondering why more models grounded in the human visual system aren't being used in deep learning systems. It's no secret that mean squared error doesn't really represent human perception of images.
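As a quick illustration of the point about mean squared error, the sketch below builds two distortions of the same hypothetical signal, a uniform brightness shift and random +/-10 per-pixel noise, that have essentially identical MSE. To a human viewer, a slight overall brightening is usually far less objectionable than visible graininess, yet MSE scores them the same. Metrics such as SSIM were designed with this kind of mismatch in mind.

```python
import random

def mse(a, b):
    """Mean squared error between two equal-length pixel lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

rng = random.Random(0)
original = [rng.uniform(50, 200) for _ in range(1000)]  # stand-in for pixel values

brightened = [p + 10 for p in original]                              # uniform brightness shift
noisy = [p + (10 if rng.random() < 0.5 else -10) for p in original]  # random +/-10 per-pixel noise

# Both scores come out at (approximately) 100, despite looking very different.
print(mse(original, brightened), mse(original, noisy))
```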

Also, many people use transfer learning to help speed up the whole GAN training process. You start with a pre-trained deep learning system, usually one trained on ImageNet, and then build your new training on top of that. In the fastai course, this trick is used everywhere to speed things up.

But the StyleGAN2 paper points out research showing that neural nets trained on ImageNet tend to rely on texture features in the internal representations they build, while people also perceive shape as an important component.

For a recap, let's finish up with a quick 4-minute overview of the StyleGAN2 work.
